🚀 huggingface/transformers - Release Notes

Deepseek v3 (based on 4.50.3) (2025-03-28)

A new model is added to transformers: DeepSeek 3 (Also known as DeepSeek R1).

It is added on top of the v4.50.3 release, and can be installed from the following tag: v4.50.3-DeepSeek-3.

In order to install this version, please install with the following command:

```

pip install git+https://github.com/huggingface/transformers@v4.50.3-DeepSeek-3

```

If fixes are needed, they will be applied to this release; this installation may therefore be considered as stable and improving.

## DeepSeek 3 (Also known as DeepSeek R1)

The model is detailed in the following [paper](https://huggingface.co/papers/2501.12948).

## Overview

The DeepSeek-V3 model was proposed in [DeepSeek-V3 Technical Report](https://arxiv.org/abs/2412.19437) by DeepSeek-AI Team.

The abstract from the paper is the following:

*We present DeepSeek-V3, a strong Mixture-of-Experts (MoE) language model with 671B total parameters with 37B activated for each token. To achieve efficient inference and cost-effective training, DeepSeek-V3 adopts Multi-head Latent Attention (MLA) and DeepSeekMoE architectures, which were thoroughly validated in DeepSeek-V2. Furthermore, DeepSeek-V3 pioneers an auxiliary-loss-free strategy for load balancing and sets a multi-token prediction training objective for stronger performance. We pre-train DeepSeek-V3 on 14.8 trillion diverse and high-quality tokens, followed by Supervised Fine-Tuning and Reinforcement Learning stages to fully harness its capabilities. Comprehensive evaluations reveal that DeepSeek-V3 outperforms other open-source models and achieves performance comparable to leading closed-source models. Despite its excellent performance, DeepSeek-V3 requires only 2.788M H800 GPU hours for its full training. In addition, its training process is remarkably stable. Throughout the entire training process, we did not experience any irrecoverable loss spikes or perform any rollbacks. The model checkpoints are available at https://github.com/deepseek-ai/DeepSeek-V3.*

## Limitations and call for contribution!

We are super happy to make this code community-powered, and would love to see how you can help optimize the following:

- current implementation uses the "naive" attention compution (so not really MLA)

- current implementation loops through the experts. This should be replaced. Pointers to use `get_packed_weights` from `intetrations/tensor_parallel`.

- current implementation uses the eleuther formula for ROPE, using the orginal one would be more efficient! (should still follow our API)

- static cache is not supported (this should be just a generation config issue / config shape issues)

### Usage tips

The model uses Multi-head Latent Attention (MLA) and DeepSeekMoE architectures for efficient inference and cost-effective training. It employs an auxiliary-loss-free strategy for load balancing and multi-token prediction training objective. The model can be used for various language tasks after being pre-trained on 14.8 trillion tokens and going through Supervised Fine-Tuning and Reinforcement Learning stages.

You can run the model in `FP8` automatically, using 2 nodes of 8 H100 should be more than enough!

```python

# `run_deepseek_v1.py`

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

torch.manual_seed(30)

tokenizer = AutoTokenizer.from_pretrained("deepseek-r1")

chat = [

{"role": "user", "content": "Hello, how are you?"},

{"role": "assistant", "content": "I'm doing great. How can I help you today?"},

{"role": "user", "content": "I'd like to show off how chat templating works!"},

]

model = AutoModelForCausalLM.from_pretrained("deepseek-r1", device_map="auto", torch_dtype=torch.bfloat16)

inputs = tokenizer.apply_chat_template(chat, tokenize=True, add_generation_prompt=True, return_tensors="pt").to(model.device)

outputs = model.generate(inputs, max_new_tokens=50)

print(tokenizer.batch_decode(outputs))

```

This generated:

``````

<|Assistant|>

Okay, the user wants to demonstrate how chat templating works. Let me break down what that means. Chat templating is about structuring the conversation data, especially for models that need specific input formats. Maybe they're referring to something like how messages are formatted with roles (user, assistant, system) in APIs like OpenAI.

First, I should explain what chat templating is. It's the process of formatting conversation data into a structured format that the model can understand. This usually includes roles and content. For example, user messages, assistant responses, and system messages each have their own role tags.

They might want an example. Let me think of a simple conversation. The user says "Hello, how are you?" and the assistant responds "I'm doing great. How can I help you today?" Then the user follows up with wanting to show off chat templating. So the example should include the history and the new message.

In some frameworks, like Hugging Face's Transformers, chat templates are applied using Jinja2 templates. The template might look something like combining system messages, then looping through user and assistant messages with appropriate tags. For instance, using {% for message in messages %} and assigning roles like <|user|>, <|assistant|>, etc.

I should structure the example with the messages array, showing each role and content. Then apply a hypothetical template to convert that into a formatted string the model uses. Also, mention that different models have different templating requirements, like using special tokens or varying role labels.

Wait, the user mentioned "chat templating" in the context of showing off. Maybe they want a practical example they can present. So providing a code snippet or a structured data example would be helpful. Let me outline a typical messages array and then the templated output.

Also, it's important to note that proper templating ensures the model knows the conversation flow, which is crucial for generating coherent responses. Maybe include a note about why it's important, like maintaining context and role-specific processing.

Let me check if there are any common mistakes or things to avoid. For example, not closing tags properly, or mismatching roles. But maybe that's too detailed unless the user asks. Focus on the positive example first.

Putting it all together, the response should have an example messages array, the applied template, and the final formatted string. Maybe use angle brackets or special tokens as placeholders. Also, mention that this helps in training or fine-tuning models with structured data.

I think that's a solid approach. Let me structure it step by step to make it clear.

Chat templating is a way to structure conversation data (e.g., user/assistant interactions) into a format that language models understand. This is especially important for models trained to handle multi-turn dialogues, where the input must explicitly separate roles (user, assistant, system, etc.) and messages. Let’s break this down with an example!

---

### **Step 1: Raw Conversation History**

Suppose we have this conversation:

- **User**: "Hello, how are you?"

- **Assistant**: "I'm doing great. How can I help you today?"

- **User**: "I'd like to show off how chat templating works!"

---

### **Step 2: Structured Messages**

In frameworks like Hugging Face Transformers or OpenAI, conversations are often formatted as a list of dictionaries with `role` and `content`:

```python

messages = [

{"role": "user", "content": "Hello, how are you?"},

{"role": "assistant", "content": "I'm doing great. How can I help you today?"},

{"role": "user", "content": "I'd like to show off how chat templating works!"},

]

```

---

### **Step 3: Apply a Chat Template**

A **chat template** converts this structured data into a single string formatted for the model. For example, using a Jinja-style template (common in Hugging Face):

```jinja

{% for message in messages %}

{% if message['role'] == 'user' %}

<|user|>{{ message['content'] }}<|end|>

{% elif message['role'] == 'assistant' %}

<|assistant|>{{ message['content'] }}<|end|>

{% endif %}

{% endfor %}

<|assistant|>

```

---

### **Step 4: Final Templated Output**

Applying the template to our `messages` list would produce:

```text

<|user|>Hello, how are you?<|end|>

<|assistant|>I'm doing great. How can I help you today?<|end|>

<|user|>I'd like to show off how chat templating works!<|end|>

<|assistant|>

```

This tells the model:

1. The conversation history (user/assistant turns).

2. The model’s turn to generate a response (`<|assistant|>` at the end).

---

### **Key Notes**:

- **Role Separation**: Tags like `<|user|>` and `<|assistant|>` help the model distinguish speakers.

- **Special Tokens**: Models often use unique tokens (e.g., `<|end|>`) to mark message boundaries.

- **Flexibility**: Templates vary by model (e.g., OpenAI uses `{"role": "user", "content": "..."}` instead of tags).

---

### **Why This Matters**:

- **Consistency**: Ensures the model understands dialogue structure.

- **Context Preservation**: Maintains the flow of multi-turn conversations.

- **Alignment**: Matches the format the model was trained on for better performance.

Want to dive deeper or see a specific framework’s implementation (e.g., OpenAI, Llama, Mistral)? Let me know! 😊<|end▁of▁sentence|>

``````

Use the following to run it

```bash

torchrun --nproc_per_node=8 --nnodes=2 --node_rank=0|1 --rdzv-id an_id --rdzv-backend c10d --rdzv-endpoint master_addr:master_port run_deepseek_r1.py

```

If you have:

```bash

[rank0]: ncclInternalError: Internal check failed.

[rank0]: Last error:

[rank0]: Bootstrap : no socket interface found

```

error, it means NCCL was probably not loaded.

Patch release v4.50.3 (2025-03-28)

# Patch release v4.50.3

Thanks to the vllm team we have a few more bugs that slipped in!

- [generate] beam search -- fix output cropping (#37080) by @gante

- [blip-2] Fix dtype mismatch when keep in fp32 (#37068) by @zucchini-nlp

- Fix PixtralProcessor patch_size when spatial_merge_size is used (#37019)

Patch release v4.50.2 (2025-03-27)

# Patch release v4.50.2

I completely forgot to put these in the previous patch sorry!

Should put the transformers backend in a good spot!

* [Utils] torch version checks optionally accept dev versions (#36847) by @gante

* Fix processor kwargs qwen2 vl (#36890) by @yonigozlan

* Fix Pan and Scan on batched images Gemma3 (#36864) by @yonigozlan

Patch release v4.50.1 (2025-03-25)

# Patch release v4.50.1

There were some very minor bugs with the new hub kernels, and with remote code that we had to fix

- Deprecate #36741 and map Causal to Conditional (#36917) by @zucchini-nlp

- Fix pytorch deform attn path (#36923) by @qubvel

- [chameleon] fix num image token check (#36918) by @zucchini-nlp

- Fix torch version guard at import (#36907) by @zucchini-nlp

Release v4.50.0 (2025-03-21)

# Release v4.50.0

## New Model Additions

### Model-based releases

Starting with version v4.49.0, we have been doing model-based releases, additionally to our traditional, software-based monthly releases. These model-based releases provide a tag from which models may be installed.

Contrarily to our software-releases; these are not pushed to pypi and are kept on our GitHub. Each release has a tag attributed to it, such as:

- `v4.49.0-Gemma-3`

- `v4.49.0-AyaVision`

⚠️ As bugs are identified and fixed on each model, the release tags are updated so that installing from that tag always gives the best experience possible with that model.

Each new model release will always be based on the current state of the main branch at the time of its creation. This ensures that new models start with the latest features and fixes available.

For example, if two models—Gemma-3 and AyaVision—are released from main, and then a fix for gemma3 is merged, it will look something like this:

```

o---- v4.49.0-Gemma-3 (includes AyaVision, plus main fixes)

/ \

---o--o--o--o--o-- (fix for gemma3) --o--o--o main

\

o---- v4.49.0-AyaVision

```

We strive to merge model specific fixes on their respective branches as fast as possible!

### Gemma 3

Gemma 3 is heavily referenced in the following [model-based release](https://github.com/huggingface/transformers/releases/tag/v4.49.0-Gemma-3) and we recommend reading these if you want all the information relative to that model.

The Gemma 3 model was proposed by Google. It is a vision-language model composed by a [SigLIP](https://huggingface.co/docs/transformers/model_doc/siglip) vision encoder and a [Gemma 2](https://huggingface.co/docs/transformers/model_doc/gemma_2) language decoder linked by a multimodal linear projection.

It cuts an image into a fixed number of tokens same way as Siglip if the image does not exceed certain aspect ratio. For images that exceed the given aspect ratio, it crops the image into multiple smaller pacthes and concatenates them with the base image embedding.

One particularity is that the model uses bidirectional attention on all the image tokens. Also, the model interleaves sliding window local attention with full causal attention in the language backbone, where each sixth layer is a full causal attention layer.

* Gemma3 by @RyanMullins in #36658

### Shield Gemma2

ShieldGemma 2 is built on [Gemma 3](https://ai.google.dev/gemma/docs/core/model_card_3), is a 4 billion (4B) parameter model that checks the safety of both synthetic and natural images against key categories to help you build robust datasets and models. With this addition to the Gemma family of models, researchers and developers can now easily minimize the risk of harmful content in their models across key areas of harm as defined below:

- No Sexually Explicit content: The image shall not contain content that depicts explicit or graphic sexual acts (e.g., pornography, erotic nudity, depictions of rape or sexual assault).

- No Dangerous Content: The image shall not contain content that facilitates or encourages activities that could cause real-world harm (e.g., building firearms and explosive devices, promotion of terrorism, instructions for suicide).

- No Violence/Gore content: The image shall not contain content that depicts shocking, sensational, or gratuitous violence (e.g., excessive blood and gore, gratuitous violence against animals, extreme injury or moment of death).

We recommend using ShieldGemma 2 as an input filter to vision language models, or as an output filter of image generation systems. To train a robust image safety model, we curated training datasets of natural and synthetic images and instruction-tuned Gemma 3 to demonstrate strong performance.

* Shieldgemma2 #36678 by @RyanMullins

### Aya Vision

AyaVision is heavily referenced in the following [model-based release](https://github.com/huggingface/transformers/releases/tag/v4.49.0-AyaVision) and we recommend reading these if you want all the information relative to that model.

The Aya Vision 8B and 32B models is a state-of-the-art multilingual multimodal models developed by Cohere For AI. They build on the Aya Expanse recipe to handle both visual and textual information without compromising on the strong multilingual textual performance of the original model.

Aya Vision 8B combines the `Siglip2-so400-384-14` vision encoder with the Cohere CommandR-7B language model further post-trained with the Aya Expanse recipe, creating a powerful vision-language model capable of understanding images and generating text across 23 languages. Whereas, Aya Vision 32B uses Aya Expanse 32B as the language model.

Key features of Aya Vision include:

- Multimodal capabilities in 23 languages

- Strong text-only multilingual capabilities inherited from CommandR-7B post-trained with the Aya Expanse recipe and Aya Expanse 32B

- High-quality visual understanding using the Siglip2-so400-384-14 vision encoder

- Seamless integration of visual and textual information in 23 languages.

* Add aya by @ArthurZucker in #36521

### Mistral 3.1

Mistral 3.1 is heavily referenced in the following [model-based release](https://github.com/huggingface/transformers/releases/tag/v4.49.0-Mistral-3) and we recommend reading these if you want all the information relative to that model.

Building upon Mistral Small 3 (2501), Mistral Small 3.1 (2503) adds state-of-the-art vision understanding and enhances long context capabilities up to 128k tokens without compromising text performance. With 24 billion parameters, this model achieves top-tier capabilities in both text and vision tasks.

It is ideal for:

- Fast-response conversational agents.

- Low-latency function calling.

- Subject matter experts via fine-tuning.

- Local inference for hobbyists and organizations handling sensitive data.

- Programming and math reasoning.

- Long document understanding.

- Visual understanding.

* Add Mistral3 by @Cyrilvallez in #36790

### Smol VLM 2

SmolVLM-2 is heavily referenced in the following [model-based release](https://github.com/huggingface/transformers/releases/tag/v4.49.0-SmolVLM-2) and we recommend reading these if you want all the information relative to that model.

SmolVLM2 is an adaptation of the Idefics3 model with two main differences:

- It uses SmolLM2 for the text model.

- It supports multi-image and video inputs

* SmolVLM2 by @orrzohar in #36126

### SigLIP-2

SigLIP-2 is heavily referenced in the following [model-based release](https://github.com/huggingface/transformers/releases/tag/v4.49.0-SigLIP-2) and we recommend reading these if you want all the information relative to that model.

The SigLIP2 model was proposed in [SigLIP 2: Multilingual Vision-Language Encoders with Improved Semantic Understanding, Localization, and Dense Features](https://huggingface.co/papers/2502.14786) by Michael Tschannen, Alexey Gritsenko, Xiao Wang, Muhammad Ferjad Naeem, Ibrahim Alabdulmohsin,

Nikhil Parthasarathy, Talfan Evans, Lucas Beyer, Ye Xia, Basil Mustafa, Olivier Hénaff, Jeremiah Harmsen,

Andreas Steiner and Xiaohua Zhai.

The model comes in two variants

1) FixRes - model works with fixed resolution images (backward compatible with SigLIP v1)

2) NaFlex - model works with variable image aspect ratios and resolutions (SigLIP2 in `transformers`)

* Add SigLIP 2 by @qubvel in #36323

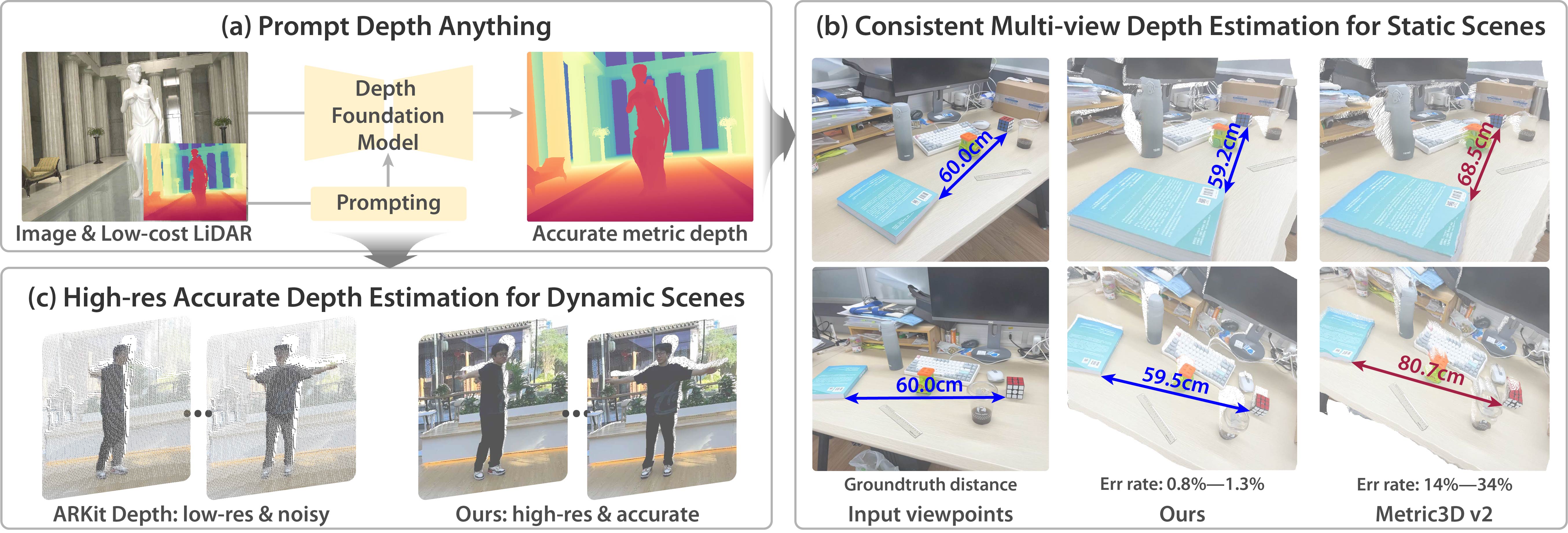

### Prompt Depth Anything

PromptDepthAnything is a high-resolution, accurate metric depth estimation model that leverages prompting, inspired by its success in vision-language (VLMs) and large language models (LLMs). Using iPhone LiDAR as a prompt, the model generates precise depth maps at up to 4K resolution, unlocking the potential of depth foundation models.

* Add Prompt Depth Anything Model by @haotongl in #35401

## New tool: attention visualization

We add a new tool to `transformers` to visualize the attention layout of a given model. It only requires a model ID as input, and will load the relevant tokenizer/model and display what the attention mask looks like. Some examples:

```py

from transformers.utils.attention_visualizer import AttentionMaskVisualizer

visualizer = AttentionMaskVisualizer("meta-llama/Llama-3.2-3B-Instruct")

visualizer("A normal attention mask")

visualizer = AttentionMaskVisualizer("mistralai/Mistral-Small-24B-Instruct-2501")

visualizer("A normal attention mask with a long text to see how it is displayed, and if it is displayed correctly")

visualizer = AttentionMaskVisualizer("google/paligemma2-3b-mix-224")

visualizer("![]() You are an assistant.", suffix = "What is on the image?")

visualizer = AttentionMaskVisualizer("google/gemma-2b")

visualizer("You are an assistant. Make sure you print me") # we should have slidiing on non sliding side by side

visualizer = AttentionMaskVisualizer("google/gemma-3-27b-it")

visualizer("

You are an assistant.", suffix = "What is on the image?")

visualizer = AttentionMaskVisualizer("google/gemma-2b")

visualizer("You are an assistant. Make sure you print me") # we should have slidiing on non sliding side by side

visualizer = AttentionMaskVisualizer("google/gemma-3-27b-it")

visualizer("![]() You are an assistant. Make sure you print me") # we should have slidiing on non sliding side by side

```

* Add attention visualization tool by @ArthurZucker in #36630

## Deprecating transformers.agents in favor of smolagents

We are deprecating `transformers.agents` in favour of the `smolagents` library. Read more about smolagents [here](https://huggingface.co/docs/smolagents/index).

* Deprecate transformers.agents by @aymeric-roucher in #36415

# Quantization

We support adding custom quantization method by using the `@register_quantization_config` and `@register_quantizer` decorator:

```python

@register_quantization_config("custom")

class CustomConfig(QuantizationConfigMixin):

pass

@register_quantizer("custom")

class CustomQuantizer(HfQuantizer):

pass

quantized_model = AutoModelForCausalLM.from_pretrained(

"facebook/opt-350m", quantization_config=CustomConfig(), torch_dtype="auto"

)

```

* Added Support for Custom Quantization by @keetrap in #35915

* Add Example for Custom quantization by @MekkCyber in #36286

AMD is developing its in-house quantizer named [Quark](https://quark.docs.amd.com/latest/) released under MIT license, which supports a broad range of quantization pre-processing, algorithms, dtypes and target hardware. You can now load a model quantized by quark library:

```python

# pip install amd-quark

model_id = "EmbeddedLLM/Llama-3.1-8B-Instruct-w_fp8_per_channel_sym"

model = AutoModelForCausalLM.from_pretrained(model_id)

model = model.to("cuda")

```

* Support loading Quark quantized models in Transformers by @fxmarty-amd and @BowenBao in #36372

Torchao is augmented with `autoquant` support, CPU-quantization, as well as new `AOBaseConfig` object instances for more advanced configuration.

* Add autoquant support for torchao quantizer by @jerryzh168 in #35503

* enable torchao quantization on CPU by @jiqing-feng in #36146

* Add option for ao base configs by @drisspg in #36526

## Tensor Parallelism implementation changes

At loading time, the parallelization is now applied module-by-module, so that no memory overhead is required compared to what the final weight distribution will be!

* TP initialization module-by-module by @Cyrilvallez in #35996

## Generation

This release includes two speed upgrades to `generate`:

1. Assisted generation now works with ANY model as an assistant, even with `do_sample=True`;

```py

from transformers import pipeline

import torch

prompt = "Alice and Bob"

checkpoint = "google/gemma-2-9b"

assistant_checkpoint = "double7/vicuna-68m"

pipe = pipeline(

"text-generation",

model=checkpoint,

assistant_model=assistant_checkpoint,

do_sample=True

)

pipe_output = pipe(prompt, max_new_tokens=50, do_sample=True)

print(pipe_output[0]["generated_text"])

```

2. Beam search was vectorized, and should be significantly faster with a large `num_beams`. The speedup is more visible on smaller models, where `model.forward` doesn't dominate the total run time.

* Universal Speculative Decoding `CandidateGenerator` by @keyboardAnt, @jmamou, and @gauravjain14 in #35029

* [generate] ✨ vectorized beam search ✨ by @gante in #35802

## Documentation

A significant redesign of our documentation has wrapped-up. The goal was to greatly simplify the `transformers` documentation, making it much more easy to navigate. Let us know what you think!

* [docs] Redesign by @stevhliu in #31757

## Notable repo maintenance

The research examples folder that was hosted in `transformers` is no more. We have moved it out of `transformers` and in the following repo: github.com/huggingface/transformers-research-projects/

* Remove research projects by @Rocketknight1 in #36645

We have updated our flex attention support so as to have it be on-par with our Flash Attention 2 support.

* Proper_flex by @ArthurZucker in #36643

### More models support flex attention now thanks to @qubvel

* Refactor Attention implementation for ViT-based models by @qubvel in #36545

### First integration of hub kernels for deformable detr!

- Use deformable_detr kernel from the Hub (#36853) by @danieldk

## Bugfixes and improvements

* [tests] fix `EsmModelIntegrationTest::test_inference_bitsandbytes` by @faaany in #36225

* Fix `LlavaForConditionalGenerationModelTest::test_config` after #36077 by @ydshieh in #36230

* AMD DeepSpeed image additional HIP dependencies by @ivarflakstad in #36195

* [generate] remove cache v4.47 deprecations by @gante in #36212

* Add missing atol to torch.testing.assert_close where rtol is specified by @ivarflakstad in #36234

* [tests] remove tf/flax tests in `/generation` by @gante in #36235

* [generate] Fix encoder decoder models attention mask by @eustlb in #36018

* Add compressed tensor in quant dockerfile by @SunMarc in #36239

* [tests] remove `test_export_to_onnx` by @gante in #36241

* Au revoir flaky `test_fast_is_faster_than_slow` by @ydshieh in #36240

* Fix TorchAoConfig not JSON serializable by @andrewor14 in #36206

* Remove flakiness in VLMs by @zucchini-nlp in #36242

* feat: add support for tensor parallel training workflow with accelerate by @kmehant in #34194

* Fix XGLM loss computation (PyTorch and TensorFlow) by @damianoamatruda in #35878

* GitModelIntegrationTest - flatten the expected slice tensor by @ivarflakstad in #36260

* Added Support for Custom Quantization by @keetrap in #35915

* Qwen2VL fix cos,sin dtypes to float when used with deepspeed by @ArdalanM in #36188

* Uniformize LlavaNextVideoProcessor kwargs by @yonigozlan in #35613

* Add support for post-processing kwargs in image-text-to-text pipeline by @yonigozlan in #35374

* Add dithering to the `Speech2TextFeatureExtractor` API. by @KarelVesely84 in #34638

* [tests] remove `pt_tf` equivalence tests by @gante in #36253

* TP initialization module-by-module by @Cyrilvallez in #35996

* [tests] deflake dither test by @gante in #36284

* [tests] remove flax-pt equivalence and cross tests by @gante in #36283

* [tests] make `test_from_pretrained_low_cpu_mem_usage_equal` less flaky by @gante in #36255

* Add Example for Custom quantization by @MekkCyber in #36286

* docs: Update README_zh-hans.md by @hyjbrave in #36269

* Fix callback handler reference by @SunMarc in #36250

* Make cache traceable by @IlyasMoutawwakil in #35873

* Fix broken CI on release branch due to missing conversion files by @ydshieh in #36275

* Ignore conversion files in test fetcher by @ydshieh in #36251

* SmolVLM2 by @orrzohar in #36126

* Fix typo in Pixtral example by @12v in #36302

* fix: prevent second save in the end of training if last step was saved already by @NosimusAI in #36219

* [smolvlm] make CI green by @gante in #36306

* Fix default attention mask of generate in MoshiForConditionalGeneration by @cyan-channel-io in #36171

* VLMs: even more clean-up by @zucchini-nlp in #36249

* Add SigLIP 2 by @qubvel in #36323

* [CI] Check test if the `GenerationTesterMixin` inheritance is correct 🐛 🔫 by @gante in #36180

* [tests] make quanto tests device-agnostic by @faaany in #36328

* Uses Collection in transformers.image_transforms.normalize by @CalOmnie in #36301

* Fix exploitable regexes in Nougat and GPTSan/GPTJNeoXJapanese by @Rocketknight1 in #36121

* [tests] enable bnb tests on xpu by @faaany in #36233

* Improve model loading for compressed tensor models by @rahul-tuli in #36152

* Change slack channel for mi250 CI to amd-hf-ci by @ivarflakstad in #36346

* Add autoquant support for torchao quantizer by @jerryzh168 in #35503

* Update amd pytorch index to match base image by @ivarflakstad in #36347

* fix(type): padding_side type should be Optional[str] by @shenxiangzhuang in #36326

* [Modeling] Reduce runtime when loading missing keys by @kylesayrs in #36312

* notify new model merged to `main` by @ydshieh in #36375

* Update modeling_llava_onevision.py by @yinsong1986 in #36391

* Load models much faster on accelerator devices!! by @Cyrilvallez in #36380

* [modular] Do not track imports in functions by @Cyrilvallez in #36279

* Fix `is_causal` fail with compile by @Cyrilvallez in #36374

* enable torchao quantization on CPU by @jiqing-feng in #36146

* Update _get_eval_sampler to reflect Trainer.tokenizer is deprecation self.tokenizer -> self.processing_class by @yukiman76 in #36315

* Fix doc formatting in forward passes & modular by @Cyrilvallez in #36243

* Added handling for length <2 of suppress_tokens for whisper by @andreystarenky in #36336

* addressing the issue #34611 to make FlaxDinov2 compatible with any batch size by @MHRDYN7 in #35138

* tests: revert change of torch_require_multi_gpu to be device agnostic by @dvrogozh in #35721

* [tests] enable autoawq tests on XPU by @faaany in #36327

* fix audio classification pipeline fp16 test on cuda by @jiqing-feng in #36359

* chore: fix function argument descriptions by @threewebcode in #36392

* Fix pytorch integration tests for SAM by @qubvel in #36397

* [CLI] add import guards by @gante in #36376

* Fix convert_to_rgb for SAM ImageProcessor by @MSt-10 in #36369

* Security fix for `benchmark.yml` by @ydshieh in #36402

* Fixed VitDet for non-squre Images by @cjfghk5697 in #35969

* Add retry hf hub decorator by @muellerzr in #35213

* Deprecate transformers.agents by @aymeric-roucher in #36415

* Fixing the docs corresponding to the breaking change in torch 2.6. by @Narsil in #36420

* add recommendations for NPU using flash_attn by @zheliuyu in #36383

* fix: prevent model access error during Optuna hyperparameter tuning by @emapco in #36395

* Universal Speculative Decoding `CandidateGenerator` by @keyboardAnt in #35029

* Fix compressed tensors config by @MekkCyber in #36421

* Update form pretrained to make TP a first class citizen by @ArthurZucker in #36335

* Fix Expected output for compressed-tensors tests by @MekkCyber in #36425

* restrict cache allocator to non quantized model by @SunMarc in #36428

* Change PR to draft when it is (re)opened by @ydshieh in #36417

* Fix permission by @ydshieh in #36443

* Fix another permission by @ydshieh in #36444

* Add `contents: write` by @ydshieh in #36445

* [save_pretrained ] Skip collecting duplicated weight by @wejoncy in #36409

* [generate] `torch.distributed`-compatible `DynamicCache` by @gante in #36373

* Lazy import libraries in `src/transformers/image_utils.py` by @hmellor in #36435

* Fix `hub_retry` by @ydshieh in #36449

* [GroundingDino] Fix grounding dino loss 🚨 by @EduardoPach in #31828

* Fix loading models with mismatched sizes by @qubvel in #36463

* [docs] fix bug in deepspeed config by @faaany in #36081

* Add Got-OCR 2 Fast image processor and refactor slow one by @yonigozlan in #36185

* Fix couples of issues from #36335 by @SunMarc in #36453

* Fix _load_state_dict_into_meta_model with device_map=None by @hlky in #36488

* Fix loading zero3 weights by @muellerzr in #36455

* Check `TRUST_REMOTE_CODE` for `RealmRetriever` for security by @ydshieh in #36511

* Fix kwargs UserWarning in SamImageProcessor by @MSt-10 in #36479

* fix torch_dtype, contiguous, and load_state_dict regression by @SunMarc in #36512

* Fix some typos in docs by @co63oc in #36502

* chore: fix message descriptions in arguments and comments by @threewebcode in #36504

* Fix pipeline+peft interaction by @Rocketknight1 in #36480

* Fix edge case for continue_final_message by @Rocketknight1 in #36404

* [Style] fix E721 warnings by @kashif in #36474

* Remove unused code by @Rocketknight1 in #36459

* [docs] Redesign by @stevhliu in #31757

* Add aya by @ArthurZucker in #36521

* chore: Fix typos in docs and examples by @co63oc in #36524

* Fix bamba tests amd by @ivarflakstad in #36535

* Fix links in quantization doc by @MekkCyber in #36528

* chore: enhance messages in docstrings by @threewebcode in #36525

* guard torch version for uint16 by @SunMarc in #36520

* Fix typos in tests by @co63oc in #36547

* Fix typos . by @zhanluxianshen in #36551

* chore: enhance message descriptions in parameters,comments,logs and docstrings by @threewebcode in #36554

* Delete redundancy if case in model_utils by @zhanluxianshen in #36559

* Modular Conversion --fix_and_overwrite on Windows by @hlky in #36583

* Integrate SwanLab for offline/online experiment tracking and local visualization by @ShaohonChen in #36433

* [bark] fix loading of generation config by @gante in #36587

* [XGLM] tag tests as slow by @gante in #36592

* fix: argument by @ariG23498 in #36558

* Mention UltraScale Playbook 🌌 in docs by @NouamaneTazi in #36589

* avoid errors when the size of `input_ids` passed to `PrefixConstrainedLogitsProcessor` is zero by @HiDolen in #36489

* Export base streamer. by @AndreasAbdi in #36500

* Github action for auto-assigning reviewers by @Rocketknight1 in #35846

* Update chat_extras.md with content correction by @krishkkk in #36599

* Update "who to tag" / "who can review" by @gante in #36394

* Fixed datatype related issues in `DataCollatorForLanguageModeling` by @capemox in #36457

* Fix check for XPU. PyTorch >= 2.6 no longer needs ipex. by @tripzero in #36593

* [`HybridCache`] disable automatic compilation by @gante in #36620

* Fix auto-assign reviewers by @Rocketknight1 in #36631

* chore: fix typos in language models by @threewebcode in #36586

* [docs] Serving LLMs by @stevhliu in #36522

* Refactor some core stuff by @ArthurZucker in #36539

* Fix bugs in mllama image processing by @tjohnson31415 in #36156

* Proper_flex by @ArthurZucker in #36643

* Fix AriaForConditionalGeneration flex attn test by @ivarflakstad in #36604

* Remove remote code warning by @Rocketknight1 in #36285

* Stop warnings from unnecessary torch.tensor() overuse by @Rocketknight1 in #36538

* [docs] Update docs dependency by @stevhliu in #36635

* Remove research projects by @Rocketknight1 in #36645

* Fix gguf docs by @SunMarc in #36601

* fix typos in the docs directory by @threewebcode in #36639

* Gemma3 by @RyanMullins in #36658

* HPU support by @IlyasMoutawwakil in #36424

* fix block mask typing by @ArthurZucker in #36661

* [CI] gemma 3 `make fix-copies` by @gante in #36664

* Fix bnb regression due to empty state dict by @SunMarc in #36663

* [core] Large/full refactor of `from_pretrained` by @Cyrilvallez in #36033

* Don't accidentally mutate the base_model_tp_plan by @Rocketknight1 in #36677

* Fix Failing GPTQ tests by @MekkCyber in #36666

* Remove hardcoded slow image processor class in processors supporting fast ones by @yonigozlan in #36266

* [quants] refactor logic for modules_to_not_convert by @SunMarc in #36672

* Remove differences between init and preprocess kwargs for fast image processors by @yonigozlan in #36186

* Refactor siglip2 fast image processor by @yonigozlan in #36406

* Fix rescale normalize inconsistencies in fast image processors by @yonigozlan in #36388

* [Cache] Don't initialize the cache on `meta` device by @gante in #36543

* Update config.torch_dtype correctly by @SunMarc in #36679

* Fix slicing for 0-dim param by @SunMarc in #36580

* Changing the test model in Quanto kv cache by @MekkCyber in #36670

* fix wandb hp search unable to resume from sweep_id by @bd793fcb in #35883

* Upgrading torch version and cuda version in quantization docker by @MekkCyber in #36264

* Change Qwen2_VL image processors to have init and call accept the same kwargs by @yonigozlan in #36207

* fix type annotation for ALL_ATTENTION_FUNCTIONS by @WineChord in #36690

* Fix dtype for params without tp_plan by @Cyrilvallez in #36681

* chore: fix typos in utils module by @threewebcode in #36668

* [CI] Automatic rerun of certain test failures by @gante in #36694

* Add loading speed test by @Cyrilvallez in #36671

* fix: fsdp sharded state dict wont work for save_only_model knob by @kmehant in #36627

* Handling an exception related to HQQ quantization in modeling by @MekkCyber in #36702

* Add GGUF support to T5-Encoder by @Isotr0py in #36700

* Final CI cleanup by @Rocketknight1 in #36703

* Add support for fast image processors in add-new-model-like CLI by @yonigozlan in #36313

* Gemma3 processor typo by @Kuangdd01 in #36710

* Make the flaky list a little more general by @Rocketknight1 in #36704

* Cleanup the regex used for doc preprocessing by @Rocketknight1 in #36648

* [model loading] don't `gc.collect()` if only 1 shard is used by @gante in #36721

* Fix/best model checkpoint fix by @seanswyi in #35885

* Try working around the processor registration bugs by @Rocketknight1 in #36184

* [tests] Parameterized `test_eager_matches_sdpa_inference` by @gante in #36650

* 🌐 [i18n-KO] Translated codegen.md to Korean by @maximizemaxwell in #36698

* Fix post_init() code duplication by @Cyrilvallez in #36727

* Fix grad accum arbitrary value by @IlyasMoutawwakil in #36691

* [Generation, Gemma 3] When passing a custom `generation_config`, overwrite default values with the model's base `generation_config` by @gante in #36684

* 🚨🚨🚨 Fix sdpa in SAM and refactor relative position embeddings by @geetu040 in #36422

* enable/disable compile for quants methods by @SunMarc in #36519

* fix can_generate by @jiqing-feng in #36570

* Allow ray datasets to be used with trainer by @FredrikNoren in #36699

* fix xpu tests by @jiqing-feng in #36656

* Fix test isolation for clear_import_cache utility by @sambhavnoobcoder in #36345

* Fix `TrainingArguments.torch_empty_cache_steps` post_init check by @pkuderov in #36734

* [MINOR:TYPO] Update hubert.md by @cakiki in #36733

* [CI] remove redundant checks in `test_eager_matches_sdpa_inference` by @gante in #36740

* [docs] Update README by @stevhliu in #36265

* doc: Clarify `is_decoder` usage in PretrainedConfig documentation by @d-kleine in #36724

* fix typos in the tests directory by @threewebcode in #36717

* chore: fix typos in tests directory by @threewebcode in #36785

* Fixing typo in gemma3 image_processor_fast and adding a small test by @Zebz13 in #36776

* Fix gemma3_text tokenizer in mapping by @LysandreJik in #36793

* Add Mistral3 by @Cyrilvallez in #36790

* fix hqq due to recent modeling changes by @SunMarc in #36771

* Update SHA for `tj-actions/changed-files` by @ydshieh in #36795

* Loading optimizations by @Cyrilvallez in #36742

* Fix Mistral3 tests by @yonigozlan in #36797

* Fix casting dtype for qunatization by @SunMarc in #36799

* Fix chameleon's TypeError because inputs_embeds may None by @YenFuLin in #36673

* Support custom dosctrings in modular by @yonigozlan in #36726

* [generate] ✨ vectorized beam search ✨ by @gante in #35802

* Expectations test utils by @ivarflakstad in #36569

* fix "Cannot copy out of meta tensor; no data!" issue for BartForConditionalGeneration model by @yao-matrix in #36572

* Remove `dist": "loadfile"` for `pytest` in CircleCI jobs by @ydshieh in #36811

* Fix Device map for bitsandbytes tests by @MekkCyber in #36800

* [Generation] remove leftover code from end-to-end compilation by @gante in #36685

* Add attention visualization tool by @ArthurZucker in #36630

* Add option for ao base configs by @drisspg in #36526

* enable OffloadedCache on XPU from PyTorch 2.7 by @yao-matrix in #36654

* [gemma 3] multimodal checkpoints + AutoModelForCausalLM by @gante in #36741

* One more fix for reviewer assignment by @Rocketknight1 in #36829

* Support tracable dynamicKVcache by @tugsbayasgalan in #36311

* Add Space to Bitsandbytes doc by @MekkCyber in #36834

* quick fix fast_image_processor register error by @JJJYmmm in #36716

* Update configuration_qwen2.py by @michaelfeil in #36735

* Just import torch AdamW instead by @Rocketknight1 in #36177

* Move the warning to the documentation for DataCollatorWithFlattening by @qgallouedec in #36707

* Fix swanlab global step by @Zeyi-Lin in #36728

* Disable inductor config setter by default by @HDCharles in #36608

* [ForCausalLMLoss] allow users to pass shifted labels by @stas00 in #36607

* fix tiktoken convert to pass AddedToken to Tokenizer by @itazap in #36566

* Saving `Trainer.collator.tokenizer` in when `Trainer.processing_class` is `None` by @innerNULL in #36552

* Pass num_items_in_batch directly to loss computation by @eljandoubi in #36753

* Fix fp16 ONNX export for RT-DETR and RT-DETRv2 by @qubvel in #36460

* Update deprecated Jax calls by @rasmi in #35919

* [qwen2 audio] remove redundant code and update docs by @gante in #36282

* Pass state dict by @phos-phophy in #35234

* [modular] Sort modular skips by @gante in #36304

* [generate] clarify docstrings: when to inherit `GenerationMixin` by @gante in #36605

* Update min safetensors bis by @SunMarc in #36823

* Fix import for torch 2.0, 2.1 - guard typehint for "device_mesh" by @qubvel in #36768

* Gemma 3: Adding explicit GenerationConfig and refactoring conversion … by @RyanMullins in #36833

* Fix: remove the redundant snippet of _whole_word_mask by @HuangBugWei in #36759

* Shieldgemma2 by @RyanMullins in #36678

* Fix ONNX export for sequence classification head by @echarlaix in #36332

* Fix hqq skipped modules and dynamic quant by @mobicham in #36821

* Use pyupgrade --py39-plus to improve code by @cyyever in #36843

* Support loading Quark quantized models in Transformers by @fxmarty-amd in #36372

* DeepSpeed tensor parallel+ZeRO by @inkcherry in #36825

* Refactor Attention implementation for ViT-based models by @qubvel in #36545

* Add Prompt Depth Anything Model by @haotongl in #35401

* Add model visual debugger by @molbap in #36798

* [torchao] revert to get_apply_tensor_subclass by @SunMarc in #36849

* Gemma3: fix test by @zucchini-nlp in #36820

* [CI] fix update metadata job by @gante in #36850

* Add support for seed in `DataCollatorForLanguageModeling` by @capemox in #36497

* Refactor Aya Vision with modular by @yonigozlan in #36688

* Mllama: raise better error by @zucchini-nlp in #35934

* [CI] doc builder without custom image by @gante in #36862

* FIX FSDP plugin update for QLoRA by @BenjaminBossan in #36720

* Remove call to `.item` in `get_batch_samples` by @regisss in #36861

* chore: fix typos in the tests directory by @threewebcode in #36813

* Make ViTPooler configurable by @sebbaur in #36517

* Revert "Update deprecated Jax calls by @ArthurZucker in #35919)"

* [generate] model defaults being inherited only happens for newer models by @gante in #36881

* :red_circle: :red_circle: :red_circle: supersede paligemma forward to shift pos id indexing by @molbap in #36859

* Gemma 3 tests expect greedy decoding by @molbap in #36882

* Use `deformable_detr` kernel from the Hub by @danieldk in #36853

* Minor Gemma 3 fixes by @molbap in #36884

* Fix: dtype cannot be str by @zucchini-nlp in #36262

## Significant community contributions

The following contributors have made significant changes to the library over the last release:

* @IlyasMoutawwakil

* Make cache traceable (#35873)

* HPU support (#36424)

* Fix grad accum arbitrary value (#36691)

* @orrzohar

* SmolVLM2 (#36126)

* @threewebcode

* chore: fix function argument descriptions (#36392)

* chore: fix message descriptions in arguments and comments (#36504)

* chore: enhance messages in docstrings (#36525)

* chore: enhance message descriptions in parameters,comments,logs and docstrings (#36554)

* chore: fix typos in language models (#36586)

* fix typos in the docs directory (#36639)

* chore: fix typos in utils module (#36668)

* fix typos in the tests directory (#36717)

* chore: fix typos in tests directory (#36785)

* chore: fix typos in the tests directory (#36813)

* @aymeric-roucher

* Deprecate transformers.agents (#36415)

* @keyboardAnt

* Universal Speculative Decoding `CandidateGenerator` (#35029)

* @EduardoPach

* [GroundingDino] Fix grounding dino loss 🚨 (#31828)

* @co63oc

* Fix some typos in docs (#36502)

* chore: Fix typos in docs and examples (#36524)

* Fix typos in tests (#36547)

* @RyanMullins

* Gemma3 (#36658)

* Gemma 3: Adding explicit GenerationConfig and refactoring conversion … (#36833)

* Shieldgemma2 (#36678)

* @cyyever

* Use pyupgrade --py39-plus to improve code (#36843)

* @haotongl

* Add Prompt Depth Anything Model (#35401)

* @danieldk

* Use `deformable_detr` kernel from the Hub (#36853)

You are an assistant. Make sure you print me") # we should have slidiing on non sliding side by side

```

* Add attention visualization tool by @ArthurZucker in #36630

## Deprecating transformers.agents in favor of smolagents

We are deprecating `transformers.agents` in favour of the `smolagents` library. Read more about smolagents [here](https://huggingface.co/docs/smolagents/index).

* Deprecate transformers.agents by @aymeric-roucher in #36415

# Quantization

We support adding custom quantization method by using the `@register_quantization_config` and `@register_quantizer` decorator:

```python

@register_quantization_config("custom")

class CustomConfig(QuantizationConfigMixin):

pass

@register_quantizer("custom")

class CustomQuantizer(HfQuantizer):

pass

quantized_model = AutoModelForCausalLM.from_pretrained(

"facebook/opt-350m", quantization_config=CustomConfig(), torch_dtype="auto"

)

```

* Added Support for Custom Quantization by @keetrap in #35915

* Add Example for Custom quantization by @MekkCyber in #36286

AMD is developing its in-house quantizer named [Quark](https://quark.docs.amd.com/latest/) released under MIT license, which supports a broad range of quantization pre-processing, algorithms, dtypes and target hardware. You can now load a model quantized by quark library:

```python

# pip install amd-quark

model_id = "EmbeddedLLM/Llama-3.1-8B-Instruct-w_fp8_per_channel_sym"

model = AutoModelForCausalLM.from_pretrained(model_id)

model = model.to("cuda")

```

* Support loading Quark quantized models in Transformers by @fxmarty-amd and @BowenBao in #36372

Torchao is augmented with `autoquant` support, CPU-quantization, as well as new `AOBaseConfig` object instances for more advanced configuration.

* Add autoquant support for torchao quantizer by @jerryzh168 in #35503

* enable torchao quantization on CPU by @jiqing-feng in #36146

* Add option for ao base configs by @drisspg in #36526

## Tensor Parallelism implementation changes

At loading time, the parallelization is now applied module-by-module, so that no memory overhead is required compared to what the final weight distribution will be!

* TP initialization module-by-module by @Cyrilvallez in #35996

## Generation

This release includes two speed upgrades to `generate`:

1. Assisted generation now works with ANY model as an assistant, even with `do_sample=True`;

```py

from transformers import pipeline

import torch

prompt = "Alice and Bob"

checkpoint = "google/gemma-2-9b"

assistant_checkpoint = "double7/vicuna-68m"

pipe = pipeline(

"text-generation",

model=checkpoint,

assistant_model=assistant_checkpoint,

do_sample=True

)

pipe_output = pipe(prompt, max_new_tokens=50, do_sample=True)

print(pipe_output[0]["generated_text"])

```

2. Beam search was vectorized, and should be significantly faster with a large `num_beams`. The speedup is more visible on smaller models, where `model.forward` doesn't dominate the total run time.

* Universal Speculative Decoding `CandidateGenerator` by @keyboardAnt, @jmamou, and @gauravjain14 in #35029

* [generate] ✨ vectorized beam search ✨ by @gante in #35802

## Documentation

A significant redesign of our documentation has wrapped-up. The goal was to greatly simplify the `transformers` documentation, making it much more easy to navigate. Let us know what you think!

* [docs] Redesign by @stevhliu in #31757

## Notable repo maintenance

The research examples folder that was hosted in `transformers` is no more. We have moved it out of `transformers` and in the following repo: github.com/huggingface/transformers-research-projects/

* Remove research projects by @Rocketknight1 in #36645

We have updated our flex attention support so as to have it be on-par with our Flash Attention 2 support.

* Proper_flex by @ArthurZucker in #36643

### More models support flex attention now thanks to @qubvel

* Refactor Attention implementation for ViT-based models by @qubvel in #36545

### First integration of hub kernels for deformable detr!

- Use deformable_detr kernel from the Hub (#36853) by @danieldk

## Bugfixes and improvements

* [tests] fix `EsmModelIntegrationTest::test_inference_bitsandbytes` by @faaany in #36225

* Fix `LlavaForConditionalGenerationModelTest::test_config` after #36077 by @ydshieh in #36230

* AMD DeepSpeed image additional HIP dependencies by @ivarflakstad in #36195

* [generate] remove cache v4.47 deprecations by @gante in #36212

* Add missing atol to torch.testing.assert_close where rtol is specified by @ivarflakstad in #36234

* [tests] remove tf/flax tests in `/generation` by @gante in #36235

* [generate] Fix encoder decoder models attention mask by @eustlb in #36018

* Add compressed tensor in quant dockerfile by @SunMarc in #36239

* [tests] remove `test_export_to_onnx` by @gante in #36241

* Au revoir flaky `test_fast_is_faster_than_slow` by @ydshieh in #36240

* Fix TorchAoConfig not JSON serializable by @andrewor14 in #36206

* Remove flakiness in VLMs by @zucchini-nlp in #36242

* feat: add support for tensor parallel training workflow with accelerate by @kmehant in #34194

* Fix XGLM loss computation (PyTorch and TensorFlow) by @damianoamatruda in #35878

* GitModelIntegrationTest - flatten the expected slice tensor by @ivarflakstad in #36260

* Added Support for Custom Quantization by @keetrap in #35915

* Qwen2VL fix cos,sin dtypes to float when used with deepspeed by @ArdalanM in #36188

* Uniformize LlavaNextVideoProcessor kwargs by @yonigozlan in #35613

* Add support for post-processing kwargs in image-text-to-text pipeline by @yonigozlan in #35374

* Add dithering to the `Speech2TextFeatureExtractor` API. by @KarelVesely84 in #34638

* [tests] remove `pt_tf` equivalence tests by @gante in #36253

* TP initialization module-by-module by @Cyrilvallez in #35996

* [tests] deflake dither test by @gante in #36284

* [tests] remove flax-pt equivalence and cross tests by @gante in #36283

* [tests] make `test_from_pretrained_low_cpu_mem_usage_equal` less flaky by @gante in #36255

* Add Example for Custom quantization by @MekkCyber in #36286

* docs: Update README_zh-hans.md by @hyjbrave in #36269

* Fix callback handler reference by @SunMarc in #36250

* Make cache traceable by @IlyasMoutawwakil in #35873

* Fix broken CI on release branch due to missing conversion files by @ydshieh in #36275

* Ignore conversion files in test fetcher by @ydshieh in #36251

* SmolVLM2 by @orrzohar in #36126

* Fix typo in Pixtral example by @12v in #36302

* fix: prevent second save in the end of training if last step was saved already by @NosimusAI in #36219

* [smolvlm] make CI green by @gante in #36306

* Fix default attention mask of generate in MoshiForConditionalGeneration by @cyan-channel-io in #36171

* VLMs: even more clean-up by @zucchini-nlp in #36249

* Add SigLIP 2 by @qubvel in #36323

* [CI] Check test if the `GenerationTesterMixin` inheritance is correct 🐛 🔫 by @gante in #36180

* [tests] make quanto tests device-agnostic by @faaany in #36328

* Uses Collection in transformers.image_transforms.normalize by @CalOmnie in #36301

* Fix exploitable regexes in Nougat and GPTSan/GPTJNeoXJapanese by @Rocketknight1 in #36121

* [tests] enable bnb tests on xpu by @faaany in #36233

* Improve model loading for compressed tensor models by @rahul-tuli in #36152

* Change slack channel for mi250 CI to amd-hf-ci by @ivarflakstad in #36346

* Add autoquant support for torchao quantizer by @jerryzh168 in #35503

* Update amd pytorch index to match base image by @ivarflakstad in #36347

* fix(type): padding_side type should be Optional[str] by @shenxiangzhuang in #36326

* [Modeling] Reduce runtime when loading missing keys by @kylesayrs in #36312

* notify new model merged to `main` by @ydshieh in #36375

* Update modeling_llava_onevision.py by @yinsong1986 in #36391

* Load models much faster on accelerator devices!! by @Cyrilvallez in #36380

* [modular] Do not track imports in functions by @Cyrilvallez in #36279

* Fix `is_causal` fail with compile by @Cyrilvallez in #36374

* enable torchao quantization on CPU by @jiqing-feng in #36146

* Update _get_eval_sampler to reflect Trainer.tokenizer is deprecation self.tokenizer -> self.processing_class by @yukiman76 in #36315

* Fix doc formatting in forward passes & modular by @Cyrilvallez in #36243

* Added handling for length <2 of suppress_tokens for whisper by @andreystarenky in #36336

* addressing the issue #34611 to make FlaxDinov2 compatible with any batch size by @MHRDYN7 in #35138

* tests: revert change of torch_require_multi_gpu to be device agnostic by @dvrogozh in #35721

* [tests] enable autoawq tests on XPU by @faaany in #36327

* fix audio classification pipeline fp16 test on cuda by @jiqing-feng in #36359

* chore: fix function argument descriptions by @threewebcode in #36392

* Fix pytorch integration tests for SAM by @qubvel in #36397

* [CLI] add import guards by @gante in #36376

* Fix convert_to_rgb for SAM ImageProcessor by @MSt-10 in #36369

* Security fix for `benchmark.yml` by @ydshieh in #36402

* Fixed VitDet for non-squre Images by @cjfghk5697 in #35969

* Add retry hf hub decorator by @muellerzr in #35213

* Deprecate transformers.agents by @aymeric-roucher in #36415

* Fixing the docs corresponding to the breaking change in torch 2.6. by @Narsil in #36420

* add recommendations for NPU using flash_attn by @zheliuyu in #36383

* fix: prevent model access error during Optuna hyperparameter tuning by @emapco in #36395

* Universal Speculative Decoding `CandidateGenerator` by @keyboardAnt in #35029

* Fix compressed tensors config by @MekkCyber in #36421

* Update form pretrained to make TP a first class citizen by @ArthurZucker in #36335

* Fix Expected output for compressed-tensors tests by @MekkCyber in #36425

* restrict cache allocator to non quantized model by @SunMarc in #36428

* Change PR to draft when it is (re)opened by @ydshieh in #36417

* Fix permission by @ydshieh in #36443

* Fix another permission by @ydshieh in #36444

* Add `contents: write` by @ydshieh in #36445

* [save_pretrained ] Skip collecting duplicated weight by @wejoncy in #36409

* [generate] `torch.distributed`-compatible `DynamicCache` by @gante in #36373

* Lazy import libraries in `src/transformers/image_utils.py` by @hmellor in #36435

* Fix `hub_retry` by @ydshieh in #36449

* [GroundingDino] Fix grounding dino loss 🚨 by @EduardoPach in #31828

* Fix loading models with mismatched sizes by @qubvel in #36463

* [docs] fix bug in deepspeed config by @faaany in #36081

* Add Got-OCR 2 Fast image processor and refactor slow one by @yonigozlan in #36185

* Fix couples of issues from #36335 by @SunMarc in #36453

* Fix _load_state_dict_into_meta_model with device_map=None by @hlky in #36488

* Fix loading zero3 weights by @muellerzr in #36455

* Check `TRUST_REMOTE_CODE` for `RealmRetriever` for security by @ydshieh in #36511

* Fix kwargs UserWarning in SamImageProcessor by @MSt-10 in #36479

* fix torch_dtype, contiguous, and load_state_dict regression by @SunMarc in #36512

* Fix some typos in docs by @co63oc in #36502

* chore: fix message descriptions in arguments and comments by @threewebcode in #36504

* Fix pipeline+peft interaction by @Rocketknight1 in #36480

* Fix edge case for continue_final_message by @Rocketknight1 in #36404

* [Style] fix E721 warnings by @kashif in #36474

* Remove unused code by @Rocketknight1 in #36459

* [docs] Redesign by @stevhliu in #31757

* Add aya by @ArthurZucker in #36521

* chore: Fix typos in docs and examples by @co63oc in #36524

* Fix bamba tests amd by @ivarflakstad in #36535

* Fix links in quantization doc by @MekkCyber in #36528

* chore: enhance messages in docstrings by @threewebcode in #36525

* guard torch version for uint16 by @SunMarc in #36520

* Fix typos in tests by @co63oc in #36547

* Fix typos . by @zhanluxianshen in #36551

* chore: enhance message descriptions in parameters,comments,logs and docstrings by @threewebcode in #36554

* Delete redundancy if case in model_utils by @zhanluxianshen in #36559

* Modular Conversion --fix_and_overwrite on Windows by @hlky in #36583

* Integrate SwanLab for offline/online experiment tracking and local visualization by @ShaohonChen in #36433

* [bark] fix loading of generation config by @gante in #36587

* [XGLM] tag tests as slow by @gante in #36592

* fix: argument by @ariG23498 in #36558

* Mention UltraScale Playbook 🌌 in docs by @NouamaneTazi in #36589

* avoid errors when the size of `input_ids` passed to `PrefixConstrainedLogitsProcessor` is zero by @HiDolen in #36489

* Export base streamer. by @AndreasAbdi in #36500

* Github action for auto-assigning reviewers by @Rocketknight1 in #35846

* Update chat_extras.md with content correction by @krishkkk in #36599

* Update "who to tag" / "who can review" by @gante in #36394

* Fixed datatype related issues in `DataCollatorForLanguageModeling` by @capemox in #36457

* Fix check for XPU. PyTorch >= 2.6 no longer needs ipex. by @tripzero in #36593

* [`HybridCache`] disable automatic compilation by @gante in #36620

* Fix auto-assign reviewers by @Rocketknight1 in #36631

* chore: fix typos in language models by @threewebcode in #36586

* [docs] Serving LLMs by @stevhliu in #36522

* Refactor some core stuff by @ArthurZucker in #36539

* Fix bugs in mllama image processing by @tjohnson31415 in #36156

* Proper_flex by @ArthurZucker in #36643

* Fix AriaForConditionalGeneration flex attn test by @ivarflakstad in #36604

* Remove remote code warning by @Rocketknight1 in #36285

* Stop warnings from unnecessary torch.tensor() overuse by @Rocketknight1 in #36538

* [docs] Update docs dependency by @stevhliu in #36635

* Remove research projects by @Rocketknight1 in #36645

* Fix gguf docs by @SunMarc in #36601

* fix typos in the docs directory by @threewebcode in #36639

* Gemma3 by @RyanMullins in #36658

* HPU support by @IlyasMoutawwakil in #36424

* fix block mask typing by @ArthurZucker in #36661

* [CI] gemma 3 `make fix-copies` by @gante in #36664

* Fix bnb regression due to empty state dict by @SunMarc in #36663

* [core] Large/full refactor of `from_pretrained` by @Cyrilvallez in #36033

* Don't accidentally mutate the base_model_tp_plan by @Rocketknight1 in #36677

* Fix Failing GPTQ tests by @MekkCyber in #36666

* Remove hardcoded slow image processor class in processors supporting fast ones by @yonigozlan in #36266

* [quants] refactor logic for modules_to_not_convert by @SunMarc in #36672

* Remove differences between init and preprocess kwargs for fast image processors by @yonigozlan in #36186

* Refactor siglip2 fast image processor by @yonigozlan in #36406

* Fix rescale normalize inconsistencies in fast image processors by @yonigozlan in #36388

* [Cache] Don't initialize the cache on `meta` device by @gante in #36543

* Update config.torch_dtype correctly by @SunMarc in #36679

* Fix slicing for 0-dim param by @SunMarc in #36580

* Changing the test model in Quanto kv cache by @MekkCyber in #36670

* fix wandb hp search unable to resume from sweep_id by @bd793fcb in #35883

* Upgrading torch version and cuda version in quantization docker by @MekkCyber in #36264

* Change Qwen2_VL image processors to have init and call accept the same kwargs by @yonigozlan in #36207

* fix type annotation for ALL_ATTENTION_FUNCTIONS by @WineChord in #36690

* Fix dtype for params without tp_plan by @Cyrilvallez in #36681

* chore: fix typos in utils module by @threewebcode in #36668

* [CI] Automatic rerun of certain test failures by @gante in #36694

* Add loading speed test by @Cyrilvallez in #36671

* fix: fsdp sharded state dict wont work for save_only_model knob by @kmehant in #36627

* Handling an exception related to HQQ quantization in modeling by @MekkCyber in #36702

* Add GGUF support to T5-Encoder by @Isotr0py in #36700

* Final CI cleanup by @Rocketknight1 in #36703

* Add support for fast image processors in add-new-model-like CLI by @yonigozlan in #36313

* Gemma3 processor typo by @Kuangdd01 in #36710

* Make the flaky list a little more general by @Rocketknight1 in #36704

* Cleanup the regex used for doc preprocessing by @Rocketknight1 in #36648

* [model loading] don't `gc.collect()` if only 1 shard is used by @gante in #36721

* Fix/best model checkpoint fix by @seanswyi in #35885

* Try working around the processor registration bugs by @Rocketknight1 in #36184

* [tests] Parameterized `test_eager_matches_sdpa_inference` by @gante in #36650

* 🌐 [i18n-KO] Translated codegen.md to Korean by @maximizemaxwell in #36698

* Fix post_init() code duplication by @Cyrilvallez in #36727

* Fix grad accum arbitrary value by @IlyasMoutawwakil in #36691

* [Generation, Gemma 3] When passing a custom `generation_config`, overwrite default values with the model's base `generation_config` by @gante in #36684

* 🚨🚨🚨 Fix sdpa in SAM and refactor relative position embeddings by @geetu040 in #36422

* enable/disable compile for quants methods by @SunMarc in #36519

* fix can_generate by @jiqing-feng in #36570

* Allow ray datasets to be used with trainer by @FredrikNoren in #36699

* fix xpu tests by @jiqing-feng in #36656

* Fix test isolation for clear_import_cache utility by @sambhavnoobcoder in #36345

* Fix `TrainingArguments.torch_empty_cache_steps` post_init check by @pkuderov in #36734

* [MINOR:TYPO] Update hubert.md by @cakiki in #36733

* [CI] remove redundant checks in `test_eager_matches_sdpa_inference` by @gante in #36740

* [docs] Update README by @stevhliu in #36265

* doc: Clarify `is_decoder` usage in PretrainedConfig documentation by @d-kleine in #36724

* fix typos in the tests directory by @threewebcode in #36717

* chore: fix typos in tests directory by @threewebcode in #36785

* Fixing typo in gemma3 image_processor_fast and adding a small test by @Zebz13 in #36776

* Fix gemma3_text tokenizer in mapping by @LysandreJik in #36793

* Add Mistral3 by @Cyrilvallez in #36790

* fix hqq due to recent modeling changes by @SunMarc in #36771

* Update SHA for `tj-actions/changed-files` by @ydshieh in #36795

* Loading optimizations by @Cyrilvallez in #36742

* Fix Mistral3 tests by @yonigozlan in #36797

* Fix casting dtype for qunatization by @SunMarc in #36799

* Fix chameleon's TypeError because inputs_embeds may None by @YenFuLin in #36673

* Support custom dosctrings in modular by @yonigozlan in #36726

* [generate] ✨ vectorized beam search ✨ by @gante in #35802

* Expectations test utils by @ivarflakstad in #36569

* fix "Cannot copy out of meta tensor; no data!" issue for BartForConditionalGeneration model by @yao-matrix in #36572

* Remove `dist": "loadfile"` for `pytest` in CircleCI jobs by @ydshieh in #36811

* Fix Device map for bitsandbytes tests by @MekkCyber in #36800

* [Generation] remove leftover code from end-to-end compilation by @gante in #36685

* Add attention visualization tool by @ArthurZucker in #36630

* Add option for ao base configs by @drisspg in #36526

* enable OffloadedCache on XPU from PyTorch 2.7 by @yao-matrix in #36654

* [gemma 3] multimodal checkpoints + AutoModelForCausalLM by @gante in #36741

* One more fix for reviewer assignment by @Rocketknight1 in #36829

* Support tracable dynamicKVcache by @tugsbayasgalan in #36311

* Add Space to Bitsandbytes doc by @MekkCyber in #36834

* quick fix fast_image_processor register error by @JJJYmmm in #36716

* Update configuration_qwen2.py by @michaelfeil in #36735

* Just import torch AdamW instead by @Rocketknight1 in #36177

* Move the warning to the documentation for DataCollatorWithFlattening by @qgallouedec in #36707

* Fix swanlab global step by @Zeyi-Lin in #36728

* Disable inductor config setter by default by @HDCharles in #36608

* [ForCausalLMLoss] allow users to pass shifted labels by @stas00 in #36607

* fix tiktoken convert to pass AddedToken to Tokenizer by @itazap in #36566

* Saving `Trainer.collator.tokenizer` in when `Trainer.processing_class` is `None` by @innerNULL in #36552

* Pass num_items_in_batch directly to loss computation by @eljandoubi in #36753

* Fix fp16 ONNX export for RT-DETR and RT-DETRv2 by @qubvel in #36460

* Update deprecated Jax calls by @rasmi in #35919

* [qwen2 audio] remove redundant code and update docs by @gante in #36282

* Pass state dict by @phos-phophy in #35234

* [modular] Sort modular skips by @gante in #36304

* [generate] clarify docstrings: when to inherit `GenerationMixin` by @gante in #36605

* Update min safetensors bis by @SunMarc in #36823

* Fix import for torch 2.0, 2.1 - guard typehint for "device_mesh" by @qubvel in #36768

* Gemma 3: Adding explicit GenerationConfig and refactoring conversion … by @RyanMullins in #36833

* Fix: remove the redundant snippet of _whole_word_mask by @HuangBugWei in #36759

* Shieldgemma2 by @RyanMullins in #36678

* Fix ONNX export for sequence classification head by @echarlaix in #36332

* Fix hqq skipped modules and dynamic quant by @mobicham in #36821

* Use pyupgrade --py39-plus to improve code by @cyyever in #36843

* Support loading Quark quantized models in Transformers by @fxmarty-amd in #36372

* DeepSpeed tensor parallel+ZeRO by @inkcherry in #36825

* Refactor Attention implementation for ViT-based models by @qubvel in #36545

* Add Prompt Depth Anything Model by @haotongl in #35401

* Add model visual debugger by @molbap in #36798

* [torchao] revert to get_apply_tensor_subclass by @SunMarc in #36849

* Gemma3: fix test by @zucchini-nlp in #36820

* [CI] fix update metadata job by @gante in #36850

* Add support for seed in `DataCollatorForLanguageModeling` by @capemox in #36497

* Refactor Aya Vision with modular by @yonigozlan in #36688

* Mllama: raise better error by @zucchini-nlp in #35934

* [CI] doc builder without custom image by @gante in #36862

* FIX FSDP plugin update for QLoRA by @BenjaminBossan in #36720

* Remove call to `.item` in `get_batch_samples` by @regisss in #36861

* chore: fix typos in the tests directory by @threewebcode in #36813

* Make ViTPooler configurable by @sebbaur in #36517

* Revert "Update deprecated Jax calls by @ArthurZucker in #35919)"

* [generate] model defaults being inherited only happens for newer models by @gante in #36881

* :red_circle: :red_circle: :red_circle: supersede paligemma forward to shift pos id indexing by @molbap in #36859

* Gemma 3 tests expect greedy decoding by @molbap in #36882

* Use `deformable_detr` kernel from the Hub by @danieldk in #36853

* Minor Gemma 3 fixes by @molbap in #36884

* Fix: dtype cannot be str by @zucchini-nlp in #36262

## Significant community contributions

The following contributors have made significant changes to the library over the last release:

* @IlyasMoutawwakil

* Make cache traceable (#35873)

* HPU support (#36424)

* Fix grad accum arbitrary value (#36691)

* @orrzohar

* SmolVLM2 (#36126)

* @threewebcode

* chore: fix function argument descriptions (#36392)

* chore: fix message descriptions in arguments and comments (#36504)

* chore: enhance messages in docstrings (#36525)

* chore: enhance message descriptions in parameters,comments,logs and docstrings (#36554)

* chore: fix typos in language models (#36586)

* fix typos in the docs directory (#36639)

* chore: fix typos in utils module (#36668)

* fix typos in the tests directory (#36717)

* chore: fix typos in tests directory (#36785)

* chore: fix typos in the tests directory (#36813)

* @aymeric-roucher

* Deprecate transformers.agents (#36415)

* @keyboardAnt

* Universal Speculative Decoding `CandidateGenerator` (#35029)

* @EduardoPach

* [GroundingDino] Fix grounding dino loss 🚨 (#31828)

* @co63oc

* Fix some typos in docs (#36502)

* chore: Fix typos in docs and examples (#36524)

* Fix typos in tests (#36547)

* @RyanMullins

* Gemma3 (#36658)

* Gemma 3: Adding explicit GenerationConfig and refactoring conversion … (#36833)

* Shieldgemma2 (#36678)

* @cyyever

* Use pyupgrade --py39-plus to improve code (#36843)

* @haotongl

* Add Prompt Depth Anything Model (#35401)

* @danieldk

* Use `deformable_detr` kernel from the Hub (#36853)

Mistral 3 (Based on v4.49.0) (2025-03-18)

A new model is added to transformers: Mistral 3.

It is added on top of the v4.49.0 release, and can be installed from the following tag: v4.49.0-Mistral-3.

In order to install this version, please install with the following command:

```

pip install git+https://github.com/huggingface/transformers@v4.49.0-Mistral-3

```

If fixes are needed, they will be applied to this release; this installation may therefore be considered as stable and improving.

# Mistral 3

The model is detailed in the following [blog post](https://mistral.ai/news/mistral-small-3-1).

The models are available on the Hub with the following tag: [`mistral3`](https://huggingface.co/models?other=mistral3)

## Overview

Building upon Mistral Small 3 (2501), Mistral Small 3.1 (2503) adds state-of-the-art vision understanding and enhances long context capabilities up to 128k tokens without compromising text performance. With 24 billion parameters, this model achieves top-tier capabilities in both text and vision tasks.

It is ideal for:

- Fast-response conversational agents.

- Low-latency function calling.

- Subject matter experts via fine-tuning.

- Local inference for hobbyists and organizations handling sensitive data.

- Programming and math reasoning.

- Long document understanding.

- Visual understanding.

This model was contributed by [cyrilvallez](https://huggingface.co/cyrilvallez) and [yonigozlan](https://huggingface.co/yonigozlan).

The original code can be found [here](https://github.com/vllm-project/vllm/blob/main/vllm/model_executor/models/pixtral.py) and [here](https://github.com/mistralai/mistral-common).

## Usage example

### Inference with Pipeline

Here is how you can use the `image-text-to-text` pipeline to perform inference with the `Mistral3` models in just a few lines of code:

```python

>>> from transformers import pipeline

>>> messages = [

... {

... "role": "user",

... "content": [

... {

... "type": "image",

... "image": "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/bee.jpg",

... },

... {"type": "text", "text": "Describe this image."},

... ],

... },

... ]

>>> pipe = pipeline("image-text-to-text", model="mistralai/Mistral-Small-3.1-24B-Instruct-2503", torch_dtype=torch.bfloat16)

>>> outputs = pipe(text=messages, max_new_tokens=50, return_full_text=False)

>>> outputs[0]["generated_text"]

'The image depicts a vibrant and lush garden scene featuring a variety of wildflowers and plants. The central focus is on a large, pinkish-purple flower, likely a Greater Celandine (Chelidonium majus), with a'

```

### Inference on a single image

This example demonstrates how to perform inference on a single image with the Mistral3 models using chat templates.

```python

>>> from transformers import AutoProcessor, AutoModelForImageTextToText

>>> import torch

>>> torch_device = "cuda"

>>> model_checkpoint = "mistralai/Mistral-Small-3.1-24B-Instruct-2503"

>>> processor = AutoProcessor.from_pretrained(model_checkpoint)

>>> model = AutoModelForImageTextToText.from_pretrained(model_checkpoint, device_map=torch_device, torch_dtype=torch.bfloat16)

>>> messages = [

... {

... "role": "user",

... "content": [

... {"type": "image", "url": "http://images.cocodataset.org/val2017/000000039769.jpg"},

... {"type": "text", "text": "Describe this image"},

... ],

... }

... ]

>>> inputs = processor.apply_chat_template(messages, add_generation_prompt=True, tokenize=True, return_dict=True, return_tensors="pt").to(model.device, dtype=torch.bfloat16)

>>> generate_ids = model.generate(**inputs, max_new_tokens=20)

>>> decoded_output = processor.decode(generate_ids[0, inputs["input_ids"].shape[1] :], skip_special_tokens=True)

>>> decoded_output

"The image depicts two cats lying on a pink blanket. The larger cat, which appears to be an"...

```

### Text-only generation

This example shows how to generate text using the Mistral3 model without providing any image input.

````python

>>> from transformers import AutoProcessor, AutoModelForImageTextToText

>>> import torch

>>> torch_device = "cuda"

>>> model_checkpoint = ".mistralai/Mistral-Small-3.1-24B-Instruct-2503"

>>> processor = AutoProcessor.from_pretrained(model_checkpoint)

>>> model = AutoModelForImageTextToText.from_pretrained(model_checkpoint, device_map=torch_device, torch_dtype=torch.bfloat16)

>>> SYSTEM_PROMPT = "You are a conversational agent that always answers straight to the point, always end your accurate response with an ASCII drawing of a cat."

>>> user_prompt = "Give me 5 non-formal ways to say 'See you later' in French."

>>> messages = [

... {"role": "system", "content": SYSTEM_PROMPT},

... {"role": "user", "content": user_prompt},

... ]

>>> text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

>>> inputs = processor(text=text, return_tensors="pt").to(0, dtype=torch.float16)

>>> generate_ids = model.generate(**inputs, max_new_tokens=50, do_sample=False)

>>> decoded_output = processor.batch_decode(generate_ids[:, inputs["input_ids"].shape[1] :], skip_special_tokens=True)[0]

>>> print(decoded_output)

"1. À plus tard!

2. Salut, à plus!

3. À toute!

4. À la prochaine!

5. Je me casse, à plus!

```

/\_/\

( o.o )

> ^ <

```"

````

### Batched image and text inputs

Mistral3 models also support batched image and text inputs.

```python

>>> from transformers import AutoProcessor, AutoModelForImageTextToText

>>> import torch

>>> torch_device = "cuda"

>>> model_checkpoint = "mistralai/Mistral-Small-3.1-24B-Instruct-2503"

>>> processor = AutoProcessor.from_pretrained(model_checkpoint)

>>> model = AutoModelForImageTextToText.from_pretrained(model_checkpoint, device_map=torch_device, torch_dtype=torch.bfloat16)

>>> messages = [

... [

... {

... "role": "user",

... "content": [

... {"type": "image", "url": "https://llava-vl.github.io/static/images/view.jpg"},

... {"type": "text", "text": "Write a haiku for this image"},

... ],

... },

... ],

... [

... {

... "role": "user",